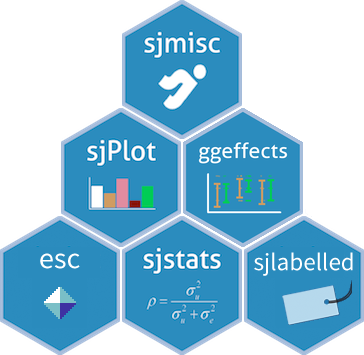

I’m pleased to announce an update for the sjmisc-package, which was just released on CRAN. Here I want to point out two important changes in the package.

New default option for recoding and transformation functions

First, a small change in the code that has a major impact on the workflow: it changes an argument default and is likely to break your existing code if you’re using sjmisc. The append-argument in recode and transformation functions like rec(), dicho(), split_var(), group_var(), center(), std(), recode_to(), row_sums(), row_count(), col_count() and row_means() now defaults to TRUE.
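As a minimal sketch of what this means in practice: with the new default, the transformed variable is added to the input data frame, while setting append = FALSE explicitly should give back only the new variable, as before. The built-in mtcars data and the choice of dicho() are assumptions made purely for illustration; they are not part of the original example.

```r
library(sjmisc)

# New default (append = TRUE): the dichotomized variable is appended
# to the input data frame as an additional column.
appended <- dicho(mtcars, mpg)
ncol(appended) > ncol(mtcars)

# Explicit append = FALSE: only the transformed variable is returned,
# which is what existing scripts may still rely on.
only_new <- dicho(mtcars, mpg, append = FALSE)
ncol(only_new)
```

If existing scripts rely on getting only the recoded variables back, adding append = FALSE to the affected calls is probably the smallest fix.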

The reason behind this change is that, in my experience and workflow, I typically want to add transformed or recoded variables to an existing data frame by default. Especially in a pipe-workflow, where I start my scripts by importing and tidying my data, I almost always want to append the recoded variables to my existing data, e.g.:

    # Example with following steps:
    # 1. loading labelled data set
    # 2. dropping unused labels
    # 3. converting numeric into categorical, using labels as levels
    # 4. center some variables
    # 5. recode some other variables
    data %>%
      drop_labels() %>%
      as_label(var1:var5) %>%
      center(var7, var9) %>%
      rec(var11, rec = "2=0;1=1;else=copy")

