I’m pleased to announce an update of my sjPlot-package, a package for Data Visualization for Statistics in Social Science. Thanks to the help of Alexander, it is now possible to create grouped Likert-plots. This is what I want to show in this post…

Continue reading „Likert-plots and grouped Likert-plots #rstats“

Tag: data visualization

ggeffects 0.8.0 now on CRAN: marginal effects for regression models #rstats

I’m happy to announce that version 0.8.0 of my ggeffects-package is on CRAN now. The update fixes some bugs from the previous version and comes with many new features and improvements. One major focus of this version is fixes and improvements for mixed models, especially zero-inflated mixed models (fitted with the glmmTMB-package).

In this post, I want to demonstrate the different options to calculate and visualize marginal effects from mixed models.

Continue reading „ggeffects 0.8.0 now on CRAN: marginal effects for regression models #rstats“

Marginal Effects for (mixed effects) regression models #rstats

ggeffects (CRAN, website) is a package that computes marginal effects at the mean (MEMs) or representative values (MERs) for many different models, including mixed effects or Bayesian models. One of the advantages of the package is its easy-to-use interface: No matter if you fit a simple or complex model, with interactions or splines, the function call is always the same. This also holds true for the returned output, which is always a data frame with the same, consistent column names.

The latest package update introduced some new features I want to describe here: a revised print()-method as well as a new option to plot marginal effects at different levels of random effects in mixed models…

Continue reading „Marginal Effects for (mixed effects) regression models #rstats“

Marginal Effects for Regression Models in R #rstats #dataviz

Regression coefficients are typically presented as tables that are easy to understand. Sometimes, however, estimates are difficult to interpret. This is especially true for interaction or transformed terms (quadratic or cubic terms, polynomials, splines), in particular for more complex models. In such cases, coefficients are no longer interpretable in a direct way and marginal effects are far easier to understand. Specifically, the visualization of marginal effects makes it possible to intuitively grasp how predictors and outcome are associated, even for complex models.

The ggeffects-package (Lüdecke 2018) aims at easily calculating marginal effects for a broad range of different regression models, from classical models fitted with lm() or glm() to complex mixed models fitted with lme4 and glmmTMB or even Bayesian models from brms and rstanarm. The goal of the ggeffects-package is to provide a simple, user-friendly interface to calculate marginal effects, which is mainly achieved by one function: ggpredict(). Regardless of the type of regression model, the output is always the same: a data frame with a consistent structure.
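To illustrate the idea of „same call, same output“, here is a minimal sketch. The models and the built-in mtcars data are my own illustrative choices and are not taken from the post; the sketch assumes that the ggeffects-package is installed.

```r
library(ggeffects)

# Illustrative linear model on a built-in data set (assumption, not from the post)
fit_lm <- lm(mpg ~ wt + hp, data = mtcars)

# Predicted values of mpg across the range of wt
pred_lm <- ggpredict(fit_lm, terms = "wt")

# Same call for a different model class: logistic regression
fit_glm <- glm(am ~ wt + hp, data = mtcars, family = binomial())
pred_glm <- ggpredict(fit_glm, terms = "wt")

# Both results are data frames; compare their column names
colnames(pred_lm)
colnames(pred_glm)
```

Because only the model object changes between the two calls, code that works on the returned data frame should not need to know which kind of model produced it.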

Continue reading „Marginal Effects for Regression Models in R #rstats #dataviz“

„One function to rule them all“ – visualization of regression models in #rstats w/ #sjPlot

I’m pleased to announce the latest update of my sjPlot-package on CRAN. Besides some bug fixes and minor new features, the major update is a new function, plot_model(), which is both an enhancement and replacement of sjp.lm(), sjp.glm(), sjp.lmer(), sjp.glmer() and sjp.int(). The latter functions will become deprecated in the next updates and will be removed at some point in the future.

plot_model() is a „generic“ plot function that accepts many model-objects, like lm, glm, lme, lmerMod etc. It offers various plot types, like estimates/coefficient plots (aka forest or dot-whisker plots), marginal effect plots, plots of interaction terms, and some diagnostic plots.

In this blog post, I want to describe how to plot estimates as forest plots.
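As a rough sketch of what such a forest plot call could look like: the model and the mtcars data below are illustrative assumptions of mine, not the example from the post, and the sketch assumes that sjPlot is installed.

```r
library(sjPlot)

# Illustrative linear model on a built-in data set (assumption, not from the post)
fit <- lm(mpg ~ wt + hp + cyl, data = mtcars)

# With default settings, plot_model() plots the model estimates
# (a forest plot); the result is a ggplot object
p <- plot_model(fit)

# Print the plot
p
```

Since the return value is a ggplot object, it can be modified further with the usual ggplot2 functions.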

More support for Bayesian analysis in the sj!-packages #rstats #rstan #brms

Another quick preview of my R-packages, especially sjPlot, which now also support brmsfit-objects from the great brms-package. To demonstrate the new features, I load all my „core“-packages at once, using the strengejacke-package, which is only available from GitHub. This package simply loads four packages (sjlabelled, sjmisc, sjstats and sjPlot).

Continue reading „More support for Bayesian analysis in the sj!-packages #rstats #rstan #brms“

Quick #sjPlot status update… #rstats #rstanarm #ggplot2

I’m working on the next update of my sjPlot-package, which will get a generic plot_model() method, which plots any kind of regression model, with different plot types being supported (forest plots for estimates, marginal effects and predictions, including displaying interaction terms, …).

The package also supports rstan and rstanarm models. Since these are typically presented in a slightly different way (e.g., „outer“ and „inner“ probability of credible intervals), I implemented special handling for these models, of which I want to show a quick preview here:

Continue reading „Quick #sjPlot status update… #rstats #rstanarm #ggplot2“

Marginal effects for negative binomial mixed effects models (glmer.nb and glmmTMB) #rstats

Here’s a small preview of forthcoming features in the ggeffects-package, which are already available in the GitHub-version: For marginal effects from models fitted with glmmTMB(), glmer() or glmer.nb(), confidence intervals are now also computed.

If you want to test these features, simply install the package from GitHub:

library(devtools)

devtools::install_github("strengejacke/ggeffects")

Here are three examples:

Going Bayes #rstats

Some time ago I started working with Bayesian methods, using the great rstanarm-package. Besides the fantastic package-vignettes, and books like Statistical Rethinking or Doing Bayesian Data Analysis, I also found the resources from Tristan Mahr helpful to better understand both Bayesian analysis and rstanarm. This motivated me to implement tools for Bayesian analysis in my packages as well.

Due to the latest tidyr-update, I had to update some of my packages in order to make them work again, so – besides some other features – some Bayes-stuff is now available in my packages on CRAN.

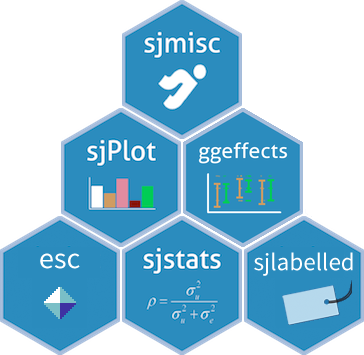

My set of packages for (daily) data analysis #rstats

I started writing my first package as a collection of various functions that I needed for (almost) daily work. Meanwhile, the packages kept growing, and bit by bit I moved functions out into new packages. Although this means more work for CRAN members when they have more packages to manage on their network, from a user-perspective it is much better if packages have a clear focus and a well defined set of functions. That’s why I now released a new package on CRAN, sjlabelled, which contains all functions that deal with labelled data. These functions used to live in the sjmisc-package, where they are now deprecated and will be removed in a future update.

My aim is not only to provide packages with a clear focus, but also with a consistent design and philosophy, making them easier and more intuitive to use (see also here) – I prefer to follow the so-called „tidyverse“-approach here. It is still work in progress, but so far I think I’m on the right track…

So, what are the packages I use for (almost) daily work?

- sjlabelled – reading, writing and working with labelled data (especially since I collaborate a lot with people who use Stata or SPSS)

- sjmisc – data and variable transformation utilities (the complement to dplyr and tidyr, when it comes down from data frames to variables within the data wrangling process)

- sjstats – out-of-the-box statistics that are not already provided by base R or other packages

- sjPlot – to quickly generate tables and plots

- ggeffects – to visualize regression models

The next step is creating cheat sheets for my packages. I think if you can map the scope and idea of your package (functions) on a cheat sheet, its focus is probably well defined.

Btw, if you also use some of the above packages more or less regularly, you can install the „strengejacke“-package to load them all in one step. This package is not on CRAN, because its only purpose is to load other packages.

Disclaimer: Of course I use other packages every day as well – this posting just focuses on the packages that I created because I frequently needed these kinds of functions.